The End of the Magic Prompt

Good output is not a wish. It is systems work. We stitch steps into chains, add memory, let agents pick tools, and tune models until behavior matches intent. The magic prompt is over. Real craft is designing how the machine thinks, then teaching it.

Prompt Engineering and the Illusion of Instruction

We think we’re giving orders. We’re steering a next-token engine. Prompts work when they mirror patterns the model has seen. Tiny phrasing changes flip outcomes. Guide with short roles, small examples, and simple formats. Verify. Cooperation, not command.

Sorting Words, Clustering Thoughts

We sort words without thinking. Machines can't. They learn to draw boundaries and find themes: spam or not, tickets by topic. Use labels when you need decisions and clusters when you need discovery. Start there. Patterns come first, meaning follows.

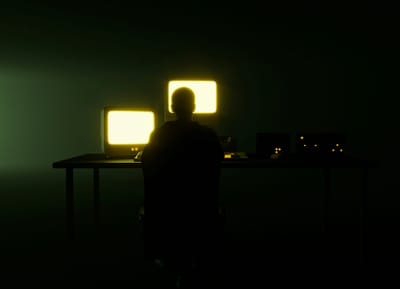

Inside the Clockwork of an AI’s Mind

Ask a question, get a fluent answer. Under the hood there is no insight, just a fast loop picking the next token, guided by attention and a limited context window. See the gears, not a ghost. Once you know the mechanism, you know when to trust it and when to steer.
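
To make the loop concrete, here is a toy sketch in Python. It is not how a real LLM works inside: it swaps the neural network and attention for plain bigram counts, and the names `train_bigrams` and `generate` are made up for illustration. What it does share with the real thing is the shape of the loop: look at what came before, pick the most likely next token, append, repeat.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count which word follows which (a stand-in for a trained model)."""
    counts = defaultdict(Counter)
    words = text.split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts, start, max_tokens=5):
    """Greedy decoding: always append the most frequent follower."""
    out = [start]
    for _ in range(max_tokens):
        followers = counts.get(out[-1])
        if not followers:
            break  # nothing ever followed this word in the training text
        out.append(followers.most_common(1)[0][0])
    return " ".join(out)


# Hypothetical training text, chosen only to keep the example small.
corpus = "the cat sat on the mat and the cat sat down"
model = train_bigrams(corpus)
print(generate(model, "the", max_tokens=2))
```

The output is fluent-looking only because the pattern was in the training text. Nothing in the loop knows what a cat is.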

Chopping Language, Weaving Meaning

Language models don’t read like we do. They slice text into tokens and map them to vectors. Meaning becomes pattern, not understanding. Learn the quirks of tokenization and embeddings to write tighter prompts, spot bias, and know what gets lost.
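
A minimal sketch of that pipeline, assuming a whitespace tokenizer and random vectors. Real models use learned subword tokenizers and trained embeddings, and every name here (`build_vocab`, `encode`, `embedding_table`) is hypothetical. The point is only to show text becoming ids, ids becoming vectors, and an unseen word collapsing into a catch-all token.

```python
import random


def build_vocab(texts):
    """Assign an integer id to every word seen; id 0 is reserved for unknowns."""
    vocab = {"<unk>": 0}
    for text in texts:
        for tok in text.lower().split():
            vocab.setdefault(tok, len(vocab))
    return vocab


def encode(text, vocab):
    """Slice text into tokens and map each to its id (unknown words become 0)."""
    return [vocab.get(tok, vocab["<unk>"]) for tok in text.lower().split()]


def embedding_table(vocab_size, dim=4, seed=0):
    """One vector per id. Random here; a real model learns these in training."""
    rng = random.Random(seed)
    return [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(vocab_size)]


vocab = build_vocab(["tokens become numbers"])
table = embedding_table(len(vocab))

ids = encode("Tokens become vectors", vocab)
vectors = [table[i] for i in ids]
print(ids)  # "vectors" was never seen, so it falls back to the <unk> id
```

Note what got lost along the way: the capital letter in "Tokens" and the entire word "vectors". Real tokenizers lose less, but they still lose something.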

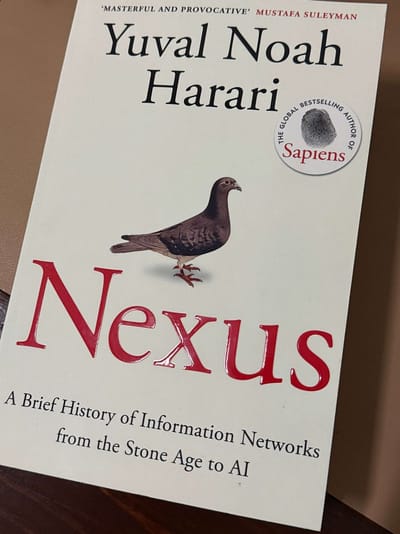

From Bag-of-Words to GPT

We moved from counting words to modeling meaning. LLMs write, code, and summarize, but they also hallucinate and echo bias. Use them as partners, not oracles. Keep your hand on the wheel. In a world where language is cheap, make your thinking rare.

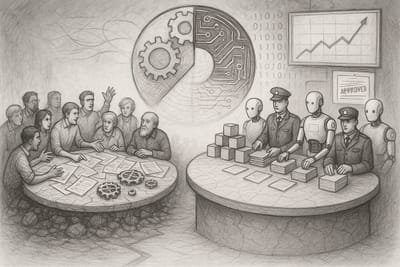

The Algorithmic Throne and the Cacophony of Democracy

How our faith in perfect algorithms threatens real self-correction, and why democracy survives by making room for noise.

The Future is Agents

Autonomous AI agents that plan, reason, and act on their own.