The Breakneck Evolution of Large Language Models

There’s a line in computing that keeps shifting, and every few years, we pretend it was always there. For most of the web’s history, “understanding language” meant counting words or matching patterns. If you wanted your machine to “read,” you reached for tools with all the subtlety of a sledgehammer.

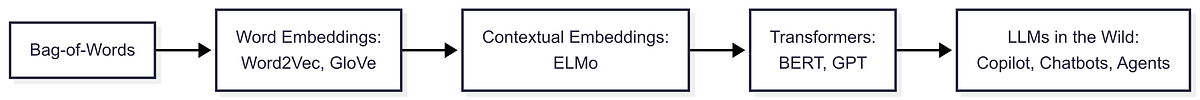

We called it Bag-of-Words. Every document became a crude shopping list: tally the nouns, verbs, and adjectives, and hope the signal outweighed the noise. Not language, just tally marks in a ledger.

For decades, that was enough. It powered search engines, spam filters, and chatbots with canned answers. Progress meant a smarter weighting scheme or a sprinkle of stemming. No one expected computers to actually understand what they read.

But then, in the late 2010s, the ground shifted. We stopped asking, “Which words appear most?” and started asking, “What is this sentence actually saying?” That’s when the idea of large language models quietly detonated in every field that touches text.

Now, less than a decade later, we expect our software to reason, riff, improvise, and sometimes outperform us at our own game.

How did we get here? And what did we lose or break along the way?

The Age of Counting Words

How Bag-of-Words Ruled, and Failed

If you built software before 2015, you remember the old regime: text wasn’t language. It was data. Every email, search query, or article was just a bag, a shapeless heap of words. “Natural language processing” meant lining up those bags, counting which words overlapped, and pretending that gave you meaning.

A spam filter didn’t know what “Congratulations, you’ve won!” actually meant. It just noticed “congratulations” often traveled with “prize,” “money,” or suspicious links, and it made a guess. Relevance? Sentiment? Context? Forget it. To Bag‑of‑Words, “I love cats” and “I don’t love cats” were nearly identical, two bags that differed by a single token, unless you wrote custom logic to handle negation.
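To see how little a bag actually holds, here’s a minimal Python sketch using only the standard library. The `bag_of_words` helper and its tokenizing regex are illustrative choices, not a standard API. The negated sentence differs from the original by exactly one token, and a reordered sentence doesn’t differ at all.

```python
from collections import Counter
import re


def bag_of_words(text: str) -> Counter:
    """Lowercase the text, split it into word tokens, and count them. Order is discarded."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return Counter(tokens)


pos = bag_of_words("I love cats")
neg = bag_of_words("I don't love cats")

# Opposite meanings, yet the bags differ by exactly one token.
print(neg - pos)  # Counter({"don't": 1})

# Word order is thrown away entirely: these two sentences produce identical bags.
print(bag_of_words("dog bites man") == bag_of_words("man bites dog"))  # True
```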

Early search engines lived in this world. So did most chatbots, document classifiers, and recommendation systems. They ran on features like word frequency, TF‑IDF, and, eventually, n‑grams. These methods were cheap, transparent, and predictable. They were also brittle.
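TF‑IDF was the workhorse refinement: weight a word up when it’s frequent in one document, and down when it shows up across many documents. Below is a minimal sketch of the textbook formula, tf × log(N / df), in plain Python. The toy documents are invented, and real libraries typically add smoothing and normalization on top of this basic form.

```python
import math
from collections import Counter


def tf_idf(docs: list[list[str]]) -> list[dict[str, float]]:
    """Score each term in each document: term frequency times inverse document frequency."""
    n_docs = len(docs)
    # Document frequency: in how many documents does each term appear at least once?
    df = Counter(term for doc in docs for term in set(doc))
    scores = []
    for doc in docs:
        counts = Counter(doc)
        scores.append({
            # Count normalized by document length, weighted by log(N / df).
            term: (count / len(doc)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return scores


docs = [
    "congratulations you won a prize".split(),
    "congratulations on the new job".split(),
    "claim your prize money now".split(),
]
for doc_scores in tf_idf(docs):
    # Show the two highest-weighted terms per document.
    print(sorted(doc_scores.items(), key=lambda kv: -kv[1])[:2])
```

A word that appears in every document gets a weight of zero. That’s exactly why TF‑IDF helped with relevance, and also why it still had no idea what any of the words meant.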

If you swapped in synonyms, they stumbled. If you rearranged a sentence, they couldn’t tell the difference: “dog bites man” and “man bites dog” made exactly the same bag. Sarcasm, negation, ambiguity: all invisible. Computers weren’t parsing English. They were solving crosswords, blindfolded.

For a while, nobody cared. It was good enough for sifting spam or clustering news. But the moment you wanted nuance, or any real understanding, Bag‑of‑Words snapped in half. Language is more than a pile of tokens. It’s context, intent, rhythm, contradiction, and surprise. Machines, until recently, couldn’t see any of it.

So, what changed? Three big things: more data, faster hardware, and a new kind of math.

From Vectors to Context

The Breakthrough That Changed Everything

Bag‑of‑Words had one fatal flaw: it saw words as islands. “Bank” meant the same thing in “river bank” and “bank account.” That’s not how people read, but it’s how machines did, until word embeddings, and later contextual models, arrived.

Word2Vec (2013) and GloVe (2014) were the ones to crack the code at scale. Instead of treating words as disconnected tokens, they mapped each word to a point in high-dimensional space, a vector. Words with related meanings clustered together: “cat” sidled up to “dog,” and “France” drifted near “Paris.” Suddenly, “king” minus “man” plus “woman” landed you near “queen.” These models didn’t just count. They learned relationships.
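The vector arithmetic is easy to sketch. The four-dimensional vectors below are hand-picked and hypothetical (real Word2Vec or GloVe vectors are learned from huge corpora and typically have a few hundred dimensions), so the answer is baked in. What the sketch shows is the mechanics: cosine similarity as “closeness,” and an analogy as subtract, add, then find the nearest remaining word.

```python
import math

# Hypothetical toy vectors, hand-picked for illustration only.
# Axes (also hypothetical): [royalty, maleness, femaleness, animal]
EMBEDDINGS = {
    "king":  [0.9, 0.8, 0.1, 0.0],
    "queen": [0.9, 0.1, 0.8, 0.0],
    "man":   [0.1, 0.9, 0.1, 0.0],
    "woman": [0.1, 0.1, 0.9, 0.0],
    "cat":   [0.0, 0.2, 0.2, 0.9],
    "dog":   [0.0, 0.3, 0.2, 0.9],
}


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: 1.0 means pointing the same way, 0.0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def analogy(a: str, b: str, c: str) -> str:
    """Return the word closest to vec(a) - vec(b) + vec(c), excluding the three inputs."""
    target = [x - y + z for x, y, z in zip(EMBEDDINGS[a], EMBEDDINGS[b], EMBEDDINGS[c])]
    candidates = (w for w in EMBEDDINGS if w not in {a, b, c})
    return max(candidates, key=lambda w: cosine(EMBEDDINGS[w], target))


print(cosine(EMBEDDINGS["cat"], EMBEDDINGS["dog"]) > cosine(EMBEDDINGS["cat"], EMBEDDINGS["king"]))  # True
print(analogy("king", "man", "woman"))  # queen
```

Excluding the input words from the candidates is the usual convention for analogy queries; with learned vectors, the analogy holds only approximately, which is why “near” is the honest word.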

But even these breakthroughs missed something big: context. In Word2Vec, “bark” got exactly one vector, a blur of the sound a dog makes and the skin of a tree. That vector never changed, no matter the sentence.

That changed with models like ELMo and, crucially, transformers. ELMo (2018) let word meanings morph depending on their surroundings. But the bigger shift had already come a year earlier, with Google’s 2017 paper, “Attention Is All You Need,” which blew the field open. Its transformer architecture made it possible to read entire sequences (not just words, but phrases, paragraphs, and full documents) and decide, dynamically, which parts mattered to each word.
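The core operation from that paper is scaled dot-product attention, softmax(Q Kᵀ / √d_k) V: each word scores every word in the sequence, turns the scores into weights, and takes a weighted average. Below is a deliberately stripped-down Python sketch: one head, no learned projection matrices, no positional information, and two-dimensional toy vectors that are purely hypothetical. It’s enough to show the key property: the same “bank” vector comes out different depending on its neighbors.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, one query row at a time."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # How well does this query match each key?
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # The output is a weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical 2-d token vectors: one axis for "water", one for "finance".
river, money, bank = [1.0, 0.0], [0.0, 1.0], [0.5, 0.5]

for sentence in ([river, bank], [money, bank]):
    # Self-attention without learned projections: queries, keys, and values are the tokens themselves.
    outputs, _ = attention(sentence, sentence, sentence)
    print(outputs[1])  # the contextual vector for "bank"
# Prints [0.75, 0.25] for "river bank", then [0.25, 0.75] for "money bank".
```

Real transformers multiply each token by learned matrices to produce queries, keys, and values, and run many such heads in parallel across many layers, but the weighted-average idea is the same.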

This is where the modern language model story really begins. Suddenly, context wasn’t just a nice-to-have. It was the main event.

The GPT Moment

Scale, Surprise, and the Dawn of Reasoning Machines

The leap from clever embeddings to language models that could write, argue, and explain happened faster than anyone (honestly, even the people building them) expected.

OpenAI’s GPT-2 dropped in 2019 and felt like a magic trick. Feed it a prompt, and it replied with text that, more often than not, made sense. Sometimes it rambled or hallucinated facts, but the jump in fluency was unmistakable. For the first time, a machine could riff in English, stringing ideas together across paragraphs, not just sentences.

What changed? Not the core math, but scale. Transformers, it turned out, got dramatically better as you fed them more data and cranked up the parameter count. GPT-2 had 1.5 billion parameters. The next year, GPT-3 pushed that to 175 billion, trained on hundreds of billions of tokens drawn largely from a filtered crawl of the public web. That scale wasn’t just incremental. It was a phase change. Suddenly, language models weren’t just good at autocomplete. They started to generalize. You could ask a model to summarize legal contracts, write Python code, or explain the theory of relativity to a child.
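To get a feel for where those parameter counts come from, a common back-of-the-envelope estimate for a GPT-style transformer is about 12 × layers × width² weights: roughly 4·d² for the attention projections and 8·d² for the feed-forward block in each layer. The sketch below applies it to the reported layer counts and widths of the largest GPT-2 and GPT-3 models. It deliberately ignores embeddings, biases, and layer norms, so treat the results as estimates, not exact counts.

```python
def approx_params(n_layers: int, d_model: int) -> int:
    """Rough transformer parameter count, ignoring embeddings, biases, and layer norms.

    Per layer: attention has 4 * d_model^2 weights (query, key, value, and output
    projections), and the feed-forward block has 8 * d_model^2 (two matrices of
    d_model x 4*d_model).
    """
    return 12 * n_layers * d_model ** 2


# Reported configurations for the largest GPT-2 and GPT-3 models.
print(f"GPT-2: ~{approx_params(48, 1600) / 1e9:.2f}B")   # GPT-2: ~1.47B
print(f"GPT-3: ~{approx_params(96, 12288) / 1e9:.1f}B")  # GPT-3: ~173.9B
```

The jump comes almost entirely from width and depth: GPT-3 is about 7.7× wider and twice as deep, and because width enters squared, that multiplies out to over a hundredfold more weights.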

It wasn’t “understanding” in the human sense, but it was close enough to unsettle everyone from professors to poets. The world didn’t just get smarter software. It got a new breed of software that blurred the line between tool and collaborator.

The game was on.

LLMs Everywhere

How GPT Changed Work, Code, and Communication

I still remember the first time I saw GitHub Copilot finish my thought before I did. I typed a comment outlining a tricky function, and before I’d written a line of code, the suggestion bar filled in a solution. It was one I might have spent an hour fumbling toward on my own. It felt like cheating and magic, both at once.

By 2022, language models weren’t just a research toy. They were quietly taking over the internet’s daily plumbing. Chatbots stopped sounding like phone menus and started riffing like a bored coworker. Email clients flagged phishing attempts by reading intent, not just headers. Suddenly, “AI writing” was everywhere, from blog posts to code reviews to the scripts for customer service calls.

In developer circles, GitHub Copilot changed how millions wrote code. It didn’t just autocomplete variable names. It generated entire functions, refactored legacy logic, and even explained cryptic error messages. If you’re a software engineer, you’ve probably seen Copilot suggest solutions you never considered, or, let’s be honest, code you wouldn’t dare ship without review.

Legal, medical, and research fields rushed to adapt or defend. Lawyers began automating contract summaries. Scientists asked models to extract patterns from journals. Sometimes, the models got things wrong (sometimes hilariously, sometimes dangerously). But the direction was clear: LLMs were now in the workflow, not just the classroom or the lab.

And, inevitably, the old questions returned, sharper than before: What does it mean to “understand” something? What if a model gives a perfect answer for the wrong reasons? Can we trust a machine that doesn’t know what it doesn’t know?

Even so, the market moved. New companies and products launched weekly. Google, Meta, OpenAI, Anthropic, and every cloud vendor bet the farm on LLMs as infrastructure, not just an API.

Language models do more than answer questions. They’re quietly shaping how work gets done.

What We Gained and What We Lost

The Double‑Edged Sword of LLMs

If you spend enough time around language models, you start to notice two stories playing out in parallel.

On one side, LLMs have turned once-impossible tasks into afterthoughts. Translating between languages, summarizing legalese, coding up quick scripts: these are now minutes of work. Non‑programmers automate their workflows; writers draft whole essays from a single prompt. Education, accessibility, and research get turbocharged. People who never considered themselves “technical” now wield power once locked up in arcane syntax or enterprise software. In a world drowning in information, LLMs help you synthesize, organize, and produce at speeds that make yesterday’s “productivity hacks” look like folklore.

But on the other side, this new fluency brings a new fragility. The same models that answer questions can also hallucinate facts, reinforce biases, or spin confident nonsense. They’re great at mimicking the shape of knowledge, but they don’t know what’s true. Hallucination isn’t a rare bug; it’s baked into how these models guess the next word.
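Here’s a minimal sketch of what “guessing the next word” means in practice: turn the model’s scores into probabilities with a softmax, optionally reshaped by a temperature, then sample. The scores below are invented for illustration, and each year is treated as a single token for simplicity. Nothing in this loop checks facts; a plausible wrong year is just a slightly less likely draw.

```python
import math
import random


def next_token_distribution(logits: dict[str, float], temperature: float = 1.0) -> dict[str, float]:
    """Softmax over the model's scores; lower temperature sharpens, higher flattens."""
    scaled = {tok: score / temperature for tok, score in logits.items()}
    m = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - m) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def sample(dist: dict[str, float], rng: random.Random) -> str:
    tokens = list(dist)
    return rng.choices(tokens, weights=[dist[t] for t in tokens], k=1)[0]


# Hypothetical scores for the word after "The Eiffel Tower was completed in".
# The model ranks continuations by plausibility; nothing here checks which year is true.
logits = {"1889": 3.0, "1887": 2.2, "1899": 1.8, "Paris": 0.5}

rng = random.Random(0)
dist = next_token_distribution(logits, temperature=1.0)
draws = [sample(dist, rng) for _ in range(1000)]
wrong = sum(d != "1889" for d in draws) / len(draws)
print(f"P(1889) = {dist['1889']:.2f}; share of wrong answers in 1,000 samples: {wrong:.0%}")
```

Lowering the temperature makes the top choice more likely, but it never turns probability into truth: if the highest score sat on the wrong answer, sharpening would only make the model more confidently wrong.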

And as LLMs spread, the cost of their mistakes climbs. A model that writes code can introduce subtle bugs; one that drafts legal advice can invent clauses. Every hour a model saves comes with the chance that it quietly introduces new risk: intellectual, legal, financial, and reputational.

And there’s another, quieter tradeoff: as we automate the grunt work of writing, coding, and summarizing, we risk losing touch with the underlying reasoning. If you can generate a 1,000-word report in a minute, do you still remember how to build a careful argument or check a source? Sometimes, the illusion of understanding is more dangerous than honest ignorance.

What does all this mean? The rise of LLMs didn’t just add power. It shifted who gets to use it and what gets lost in translation.

And now, as these models reshape everything from code to contracts, we face a new set of questions, not just about what’s possible, but about what’s worth keeping. The gains in speed, scale, and convenience are real, but so are the costs: new kinds of risk and a subtle erosion of skill.

The Future

Where Large Language Models Go From Here

So, what now? In 2025, it’s clear we’re not even close to the end of this story. LLMs are everywhere, but we’re just starting to wrestle with what they’re really good for, and where they might quietly break things we care about.

Models are getting bigger, but “bigger” isn’t the only game left. The research community is pivoting: smaller, more specialized models (think: fine-tuned agents for specific domains) are gaining ground, trading raw power for transparency and lower cost. The era of “just add more data and compute” is giving way to real questions:

- How do we get reliable reasoning, not just surface-level fluency?

- How do we keep user data private when everything is a prompt away from leaking?

- How do we build trust, not just more output?

On the technical front, the biggest changes may not be the ones that grab headlines. Efficient on-device models mean your phone can summarize a call without pinging a data center. Open-weight LLMs challenge the closed-shop approach. Now anyone with enough hardware can run near state-of-the-art models, tweak them, and peer inside the black box. And we’re finally seeing real benchmarks for accuracy, safety, and environmental impact, not just leaderboard scores.

But the hard problems are still about people. Teams must decide when to trust a model and when to double-check. Product leaders must balance speed with risk, delight with diligence. And everyone (from writers to engineers to executives) must learn new habits of questioning:

- Is this output actually correct?

- Are we still thinking, or just outsourcing our judgment?

In the end, the story of LLMs isn’t just technical. It’s cultural. We’re all now in a world where language is cheap, but careful thought is rare. The best teams will be the ones that don’t just ask what the model can do. Instead, they focus on what they still need to own.

Don’t Outsource Thinking

Use LLMs to Raise the Bar

Every wave of technology brings hype and hand-wringing. The rise of large language models is no different. But if there’s one lesson from the last ten years, it’s this: tools can amplify what you already do, but they can’t replace the hard work of understanding, challenging, and improving your own thinking.

We built LLMs to help us work faster, smarter, and with fewer roadblocks. If we’re not careful, we’ll settle for faster nonsense. It’s too easy now to hit “generate,” skim the output, and move on. The best teams and builders treat every model response as a draft, not gospel. They poke, prod, and verify. They use models as partners, not oracles.

If you want to build products, content, or companies that last, don’t just chase the latest model update. Double down on clarity: yours and your team’s. When you trust an LLM with the routine, spend the saved time on the parts only humans can do: asking better questions, drawing sharper lines, and deciding what matters most.

So yes, embrace the tools. But keep your hand on the wheel. The future belongs to those who use LLMs to lift the standard, not drop it to the floor.

It’s easy to let the model finish your thought; harder, but more valuable, to keep thinking critically yourself.

In a world where language is now cheap, what will you do to make your thinking rare?
